Coping with COPPA

February 10, 2020

It’s been said before that children are our future. A line often attributed to President John F. Kennedy holds that “children are the living messages we send to a time we will not see.” Protecting them has always been a priority, and it is a sentiment the Federal Trade Commission (FTC) shares. Recently, YouTube has faced pressure from the FTC over violations of the Children’s Online Privacy Protection Act (COPPA) involving the use of children’s data for targeted advertising. With tensions on the rise, will YouTube, both the platform and its creators, survive the aftermath?

YouTube uses the data it gathers on viewers to place targeted advertising on creators’ videos. For example, if you regularly watch video game-related content, you are more likely to see ads for video games than for Taylor Swift albums. YouTube then passes 55% of the resulting ad revenue to the creator of the video. This model rewards content creators for appealing to their primary audience and gives YouTube useful advertising data on its users. Google does something similar when you search the web, which explains those weirdly specific ads you see as you surf.

“COPPA is the Children’s Online Privacy Protection Act of 1998,” Upper School teacher Darin Maier said. “Essentially, it’s designed to protect children under 13 from giving out personal information without their parents’ knowledge and consent, which impacts social media sites particularly and explains why most won’t allow someone they know to be under 13 years of age from setting up an account.”

In September 2019, YouTube agreed to pay $170 million to settle allegations by the FTC and the New York Attorney General that it collected personal information from children under 13 without parental consent. In particular, the FTC held that YouTube was liable under COPPA because the website’s rating and content algorithms sometimes specifically targeted children. To comply with the settlement, YouTube was ordered to develop, implement, and maintain a system for content creators to specify whether their content is directed at children.

YouTube also announced that it would invest $100 million over the next three years to fund the creation of child-safe content for younger audiences.

The change has drawn mixed reactions. Parents in particular have welcomed the increased effort to protect children’s interests online.

“I am glad that platforms like YouTube are taking actions to regulate content and protect children. Developmentally, children do not always have the cognitive skills necessary to distinguish between regular content and advertising,” said Upper School teacher Emily Philpott. “However, I feel that leaving all of the monitoring to media companies is not sufficient. Parents still need to be involved so that children can learn how to safely navigate the internet.”

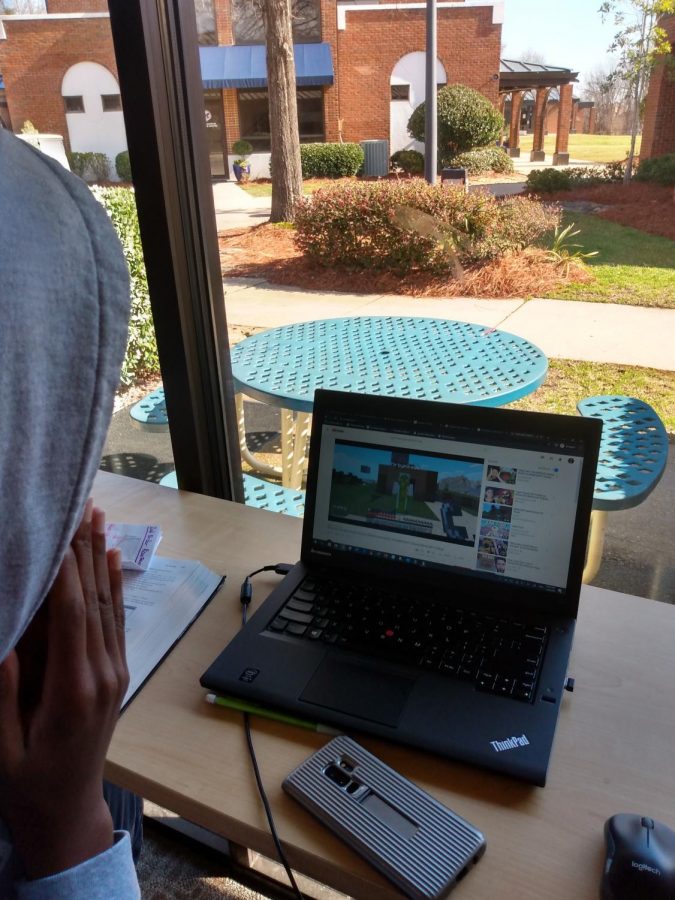

Content creators, meanwhile, face difficulties adapting to the new rules. YouTube’s primary action will be the demonetization (deactivating ad revenue) of content that it believes targets children. The ambiguity over what qualifies as children’s content makes it difficult for creators to know whether they need to alter their content or make an appeal.

“If a creator loses targeted ads on their videos, they lose about 90% of their ad revenue. Most people couldn’t take a hit like that to their salary,” said YouTuber Matthew Patrick. “When looking at what the FTC is worried about, a lot of YouTube content falls into a weird grey area.”

Overall, the outlook is rather bleak for content creators, as many nonviolent video game and cartoon channels could be stripped of much of their ad revenue. Children are definitely our future, but hopefully we can protect them without jeopardizing our present.